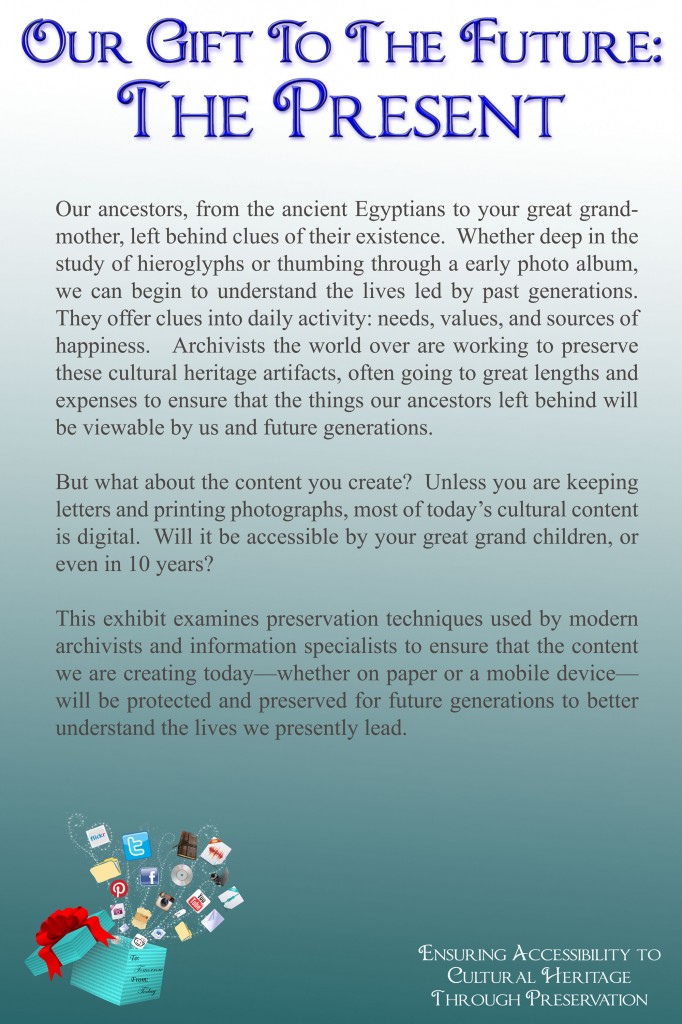

I recently gave a 45-minute presentation on digital preservation to about 50 faculty and administrators on my campus. When I have run into some of them since then, the thing that has proven memorable is a mathematical description I created for digital preservation (DP) and shortened to “4 Ds and 1 M.”

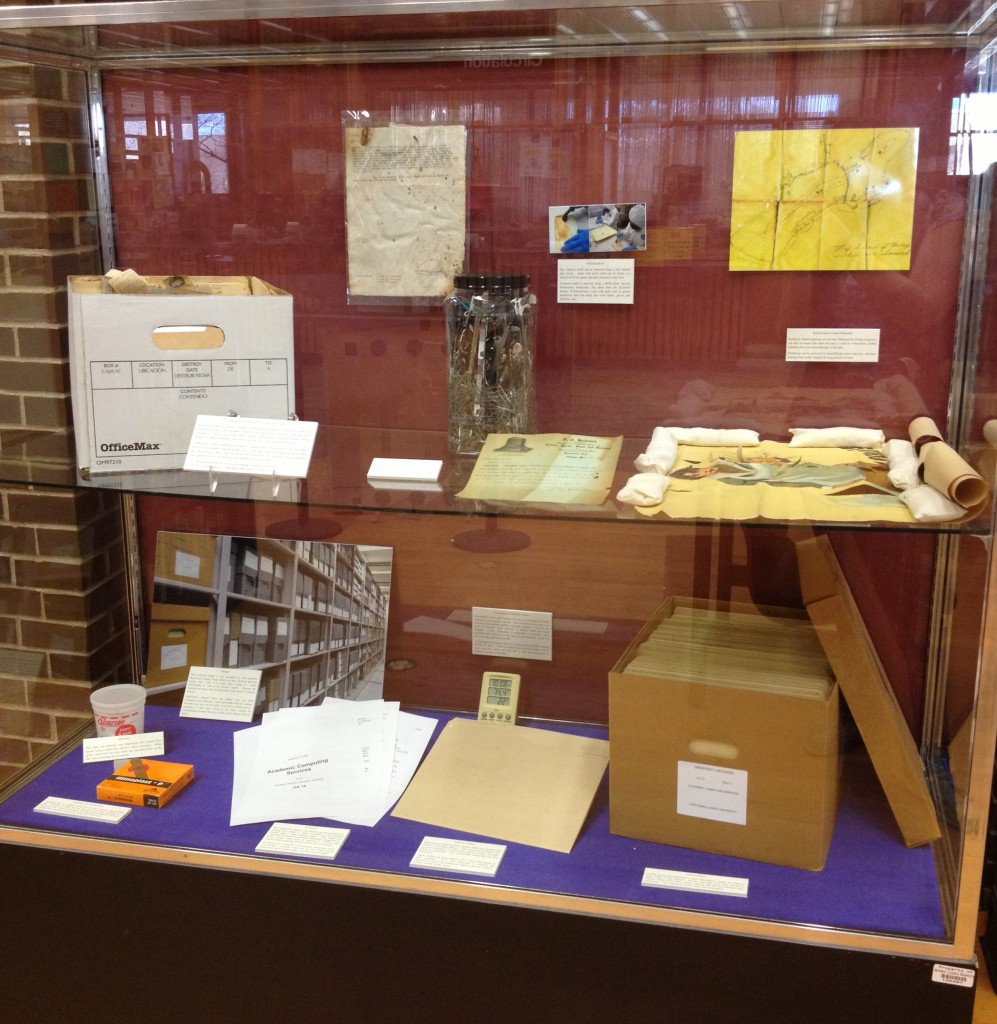

In preparation for my talk I subjected several of my colleagues to two trial runs. I wanted my overall message to be that we must make selection decisions about what we are going to “treat,” so to speak, in a preservation system. The first practice run was very objective and scholarly, but a fellow POWRR member suggested putting my “ask” right up front so the administrators would know where we were heading. A campus colleague suggested including a real example to punctuate the need.

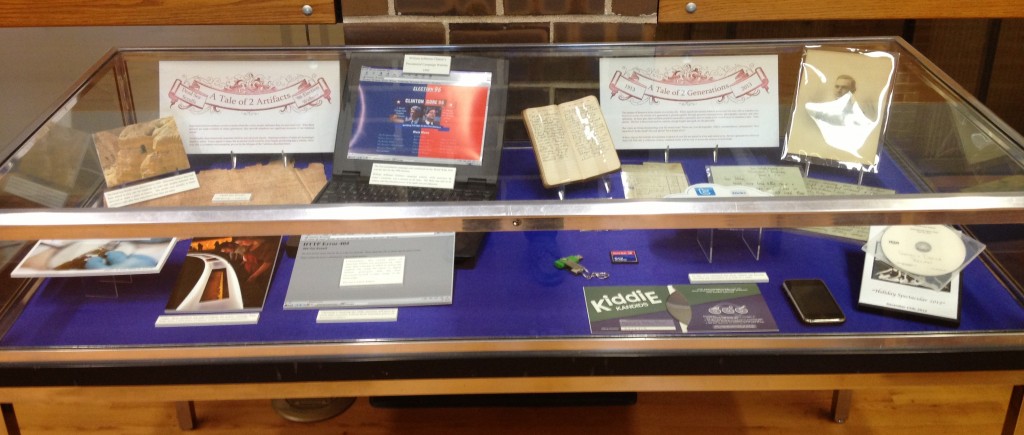

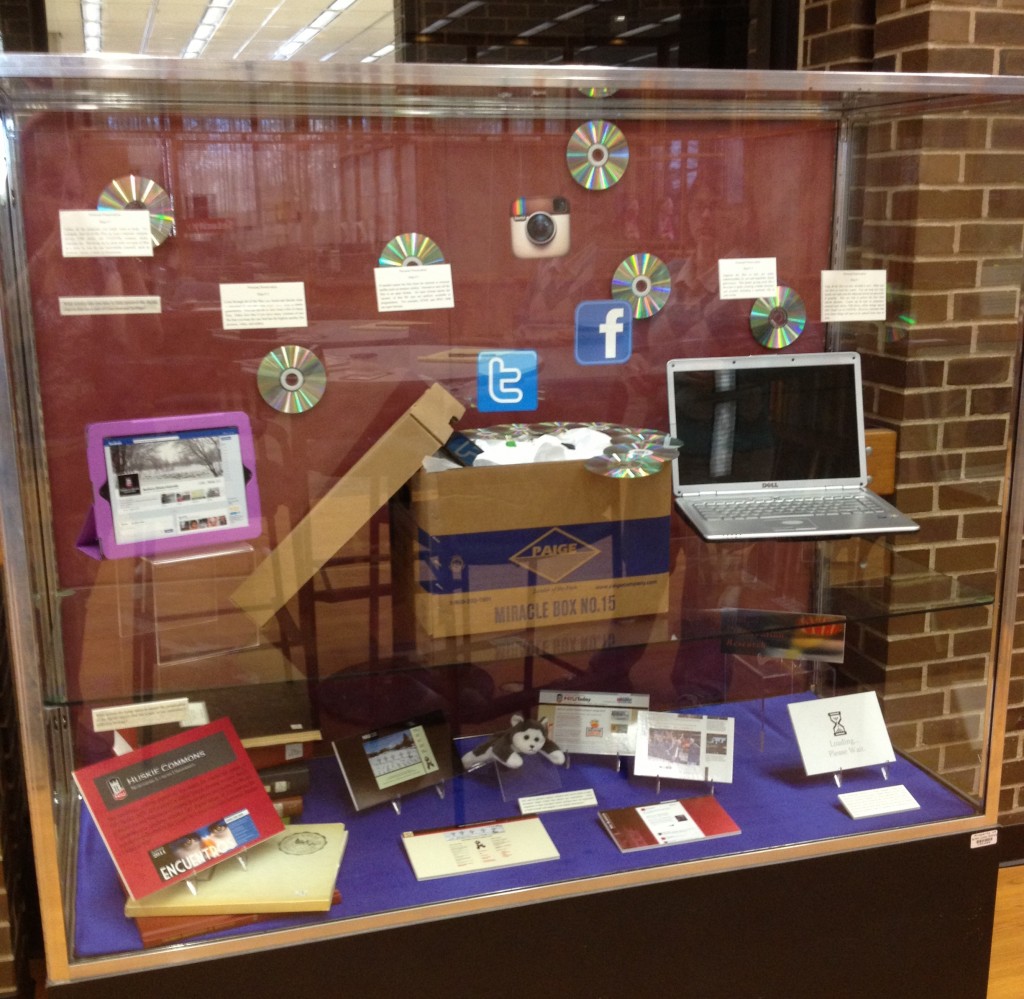

Several of the people in my focus groups have heard me talk about selection, record keeping and preservation issues on my campus for years. Their advice was to make the talk more visual and to build my case with examples I had found that anyone on campus could relate to. As a result, my talk had many screenshots showing Web-based records practices we currently engage in that put our historical record at risk.

On presentation day, I led my audience through the difference between storage and access and explained how different formats create the risks that concern me. I even found an article in The Economist to help emphasize that the concept of bit rot is finally reaching wider audiences.

The schematic of the OAIS Reference Model elicited satisfying gasps and eased the way into an overview of our grant project, the Tool-Grid, and what I discovered in our campus conversations. I also showed a great slide shared with me by colleagues who graduated from DPOE’s Training the Trainer curriculum (it looks like planetary orbits, with successively smaller rings). This visual of what decision-making looks like served as a suitable segue to what we can do now, long before deciding on a preservation system.

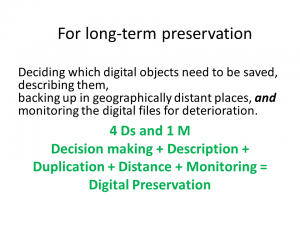

Early on in the talk I provided a distilled definition of preservation and I used it again in the closing. The long sentences we usually see didn’t really look right with all the visuals, so after a slide that distinguishes the concepts of storage and backup I came up with the following as a mnemonic device:

I’ll take the feedback I’ve been hearing as a small sign of success: at least one message resonated. When people comment, they don’t remember all of the 4 Ds, but they can usually state two of them. At least they know there is more to it than just backup! My hope is that this foot in the door can be leveraged into a wider conversation on campus and actual movement towards securing our digital heritage. Time will tell!

My advice to anyone engaging with others on this topic for the first time: identify people at your institution whose advice you value, and practice your message with them. Make sure to mix people who know what you’re talking about with a healthy dose of people who don’t. With this kind of feedback, we can at least feel confident that we will hit the right notes when we have a chance to close people up in a room and bend their ears.